About Me

My name is Lan Wu. I am an incoming Lecturer in Robotics (equivalent to Assistant Professor in the US) at the University of Western Australia (UWA), starting February 2026. My research focuses on probabilistic robotic perception, mapping, scene understanding and representation to enable reliable and intelligent robot autonomy.

I received my PhD degree in robotics in 2023 from the Robotics Institute (RI) at the University of Technology Sydney (UTS), where I was supervised by Prof. Teresa Vidal-Calleja and A/Prof. Alen Alempijevic. Since completing my PhD, I have been working as a Postdoctoral Research Fellow at the UTS Robotics Institute.

- 1 paper in IEEE Transactions on Robotics (T-RO), first author

- 6 papers in IEEE Robotics and Automation Letters (RA-L)

- Multiple publications at ICRA and IROS

- In 2024, I was recognised as an RSS Pioneer, a distinction awarded to early-career robotics researchers worldwide

- I serve as a reviewer for T-RO, RA-L, JFR, ICRA and IROS

- I serve as Associate Editor for ICRA 2025 and 2026 in the Localisation and Mapping session

Research Interests

My research focuses on robotic perception, 3D scene understanding and representation, SLAM and mapping, dynamic environment modelling, and active perception. I also work with alternative and multi-modal sensing, including LiDAR, RGB-D, sonar and radar.

My long-term goal is to develop model-based, learning-based, and hybrid representations that unify scene understanding and decision-making, enabling robots to operate reliably and intelligently in complex real-world environments.

Applications span advanced manufacturing, field robotics, human–robot collaboration, and assistive technologies such as bionic visual–spatial devices for people who are blind or vision-impaired.

- Robotic perception and 3D scene understanding

- SLAM and 3D mapping

- Dynamic environment modelling

- Active perception and view planning

- Robotic manipulation and motion planning

- Multi-modal sensor fusion (LiDAR, cameras, sonar, radar)

Key Publications (2020–2025)

[RA-L 2025] VDB-GPDF: Online Gaussian Process Distance Field with VDB Structure

Venue: IEEE Robotics and Automation Letters (RA-L), vol. 10, no. 1, pp. 374–381, 2025

Authors: Lan Wu, Cedric Le Gentil, Teresa Vidal-Calleja

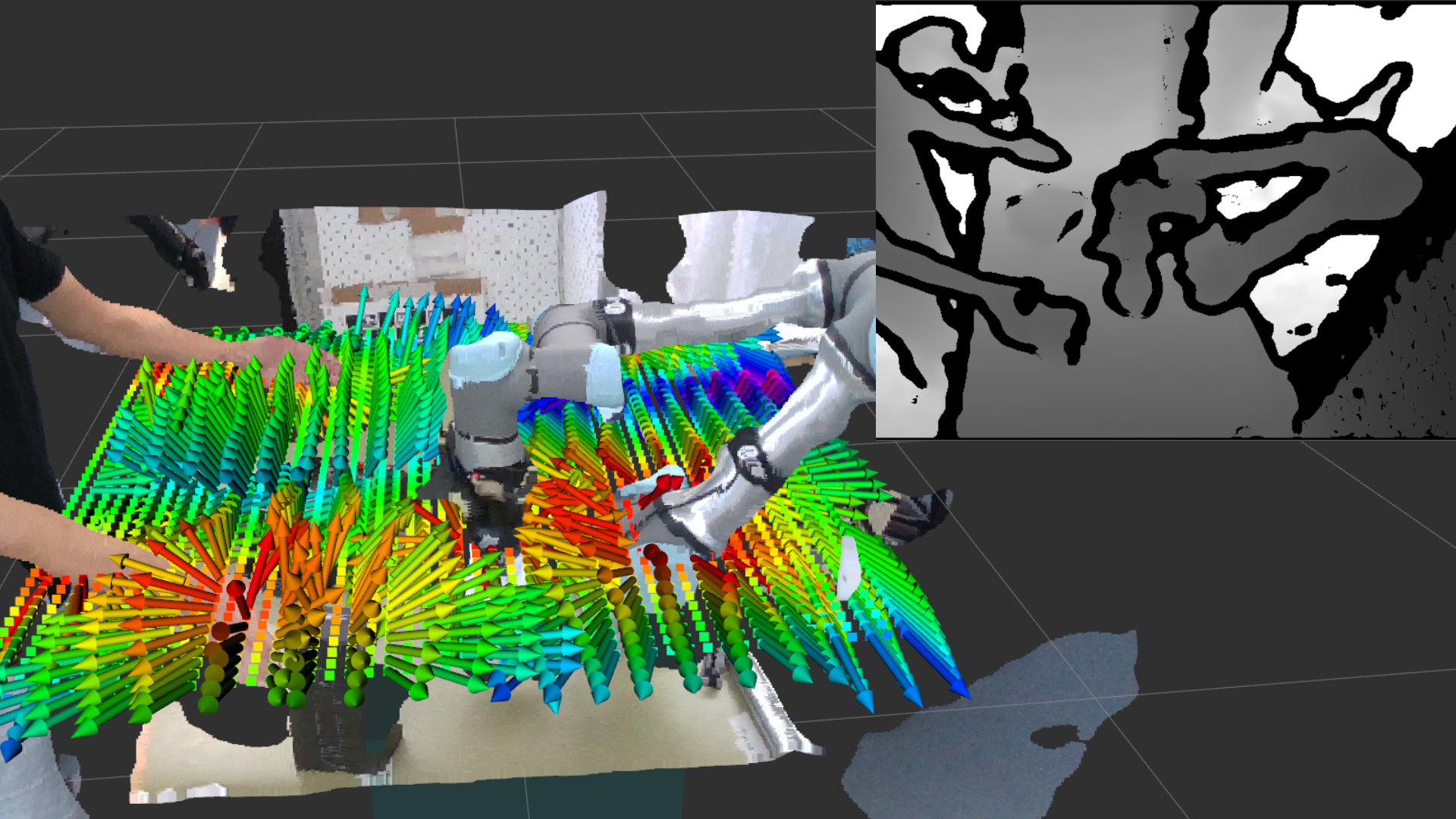

[RA-L 2024] IDMP: Interactive Distance Field Mapping and Planning to Enable Human-Robot Collaboration

Venue: IEEE Robotics and Automation Letters (RA-L), 2024

Authors: Usama Ali*, Lan Wu*, Adrian Muller, Fouad Sukkar, Tobias Kaupp, Teresa Vidal-Calleja

Note: * Co-first authors with equal contribution

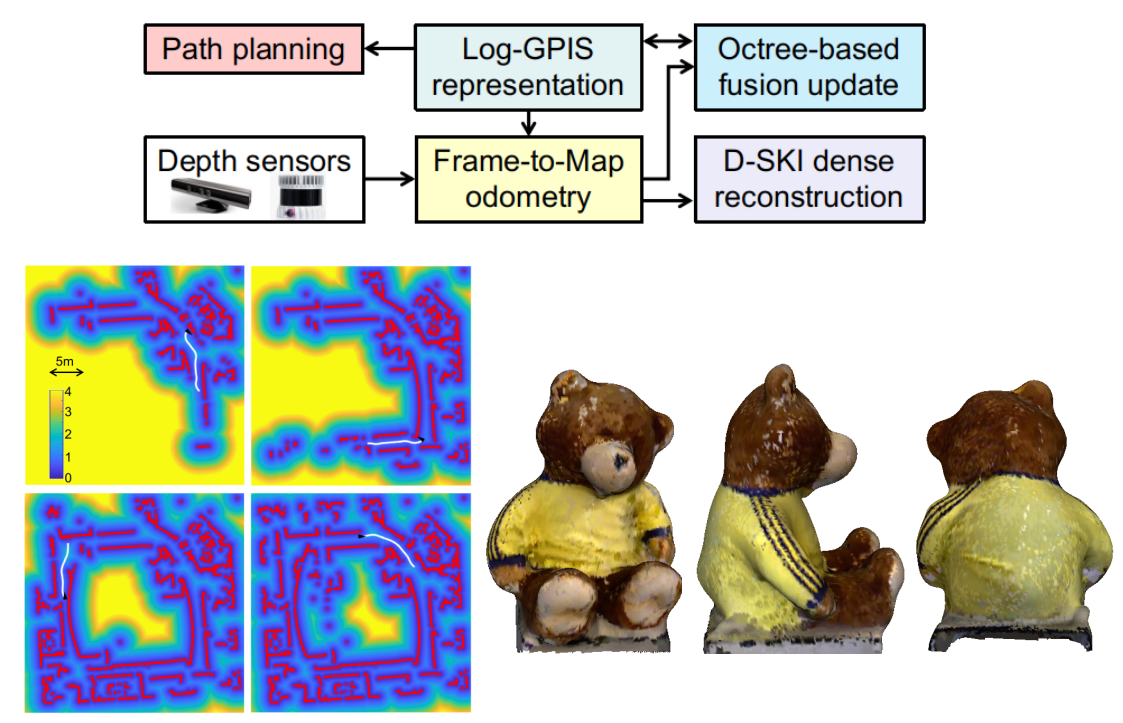

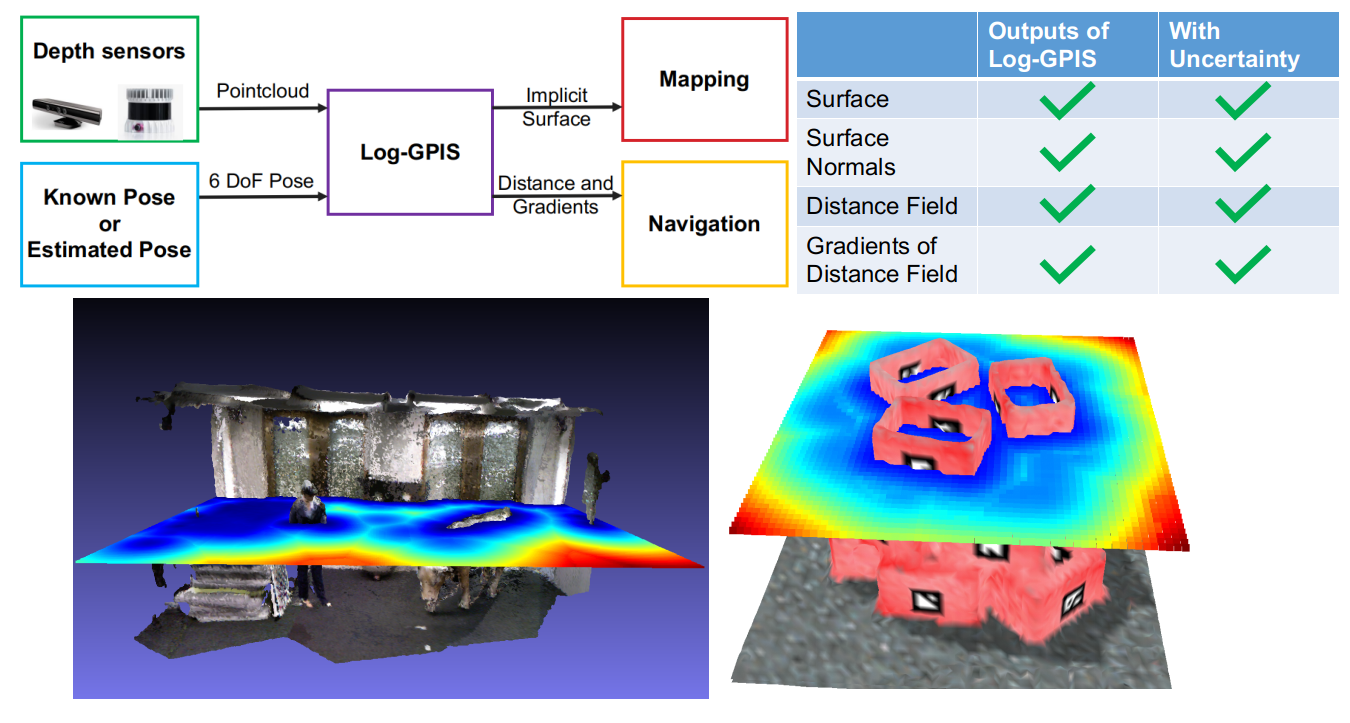

[T-RO 2023] Log-GPIS-MOP: A Unified Representation for Mapping, Odometry and Planning

Venue: IEEE Transactions on Robotics (T-RO), vol. 39, pp. 4078–4094, 2023

Authors: Lan Wu, Ki Myung Brian Lee, Cedric Le Gentil, Teresa Vidal-Calleja

[RA-L 2021] Faithful Euclidean Distance Field from Log-Gaussian Process Implicit Surfaces

Venue: IEEE Robotics and Automation Letters (RA-L), vol. 6, no. 2, pp. 2461–2468, 2021

Authors: Lan Wu, Ki Myung Brian Lee, Liyang Liu, Teresa Vidal-Calleja

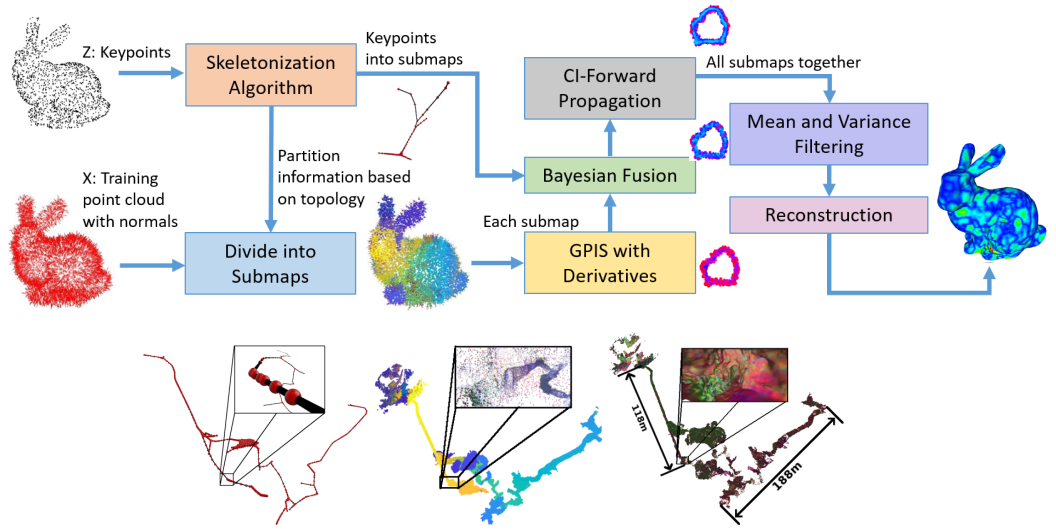

[RA-L 2020] Skeleton-Based Conditionally Independent Gaussian Process Implicit Surfaces for Fusion in Sparse to Dense 3D Reconstruction

Venue: IEEE Robotics and Automation Letters (RA-L), vol. 5, no. 2, pp. 1532–1539, 2020

Authors: Lan Wu, Raphael Falque, Victor Perez-Puchalt, Liyang Liu, Nico Pietroni, Teresa Vidal-Calleja

Robotic Projects I Have Contributed To (2020–2025)

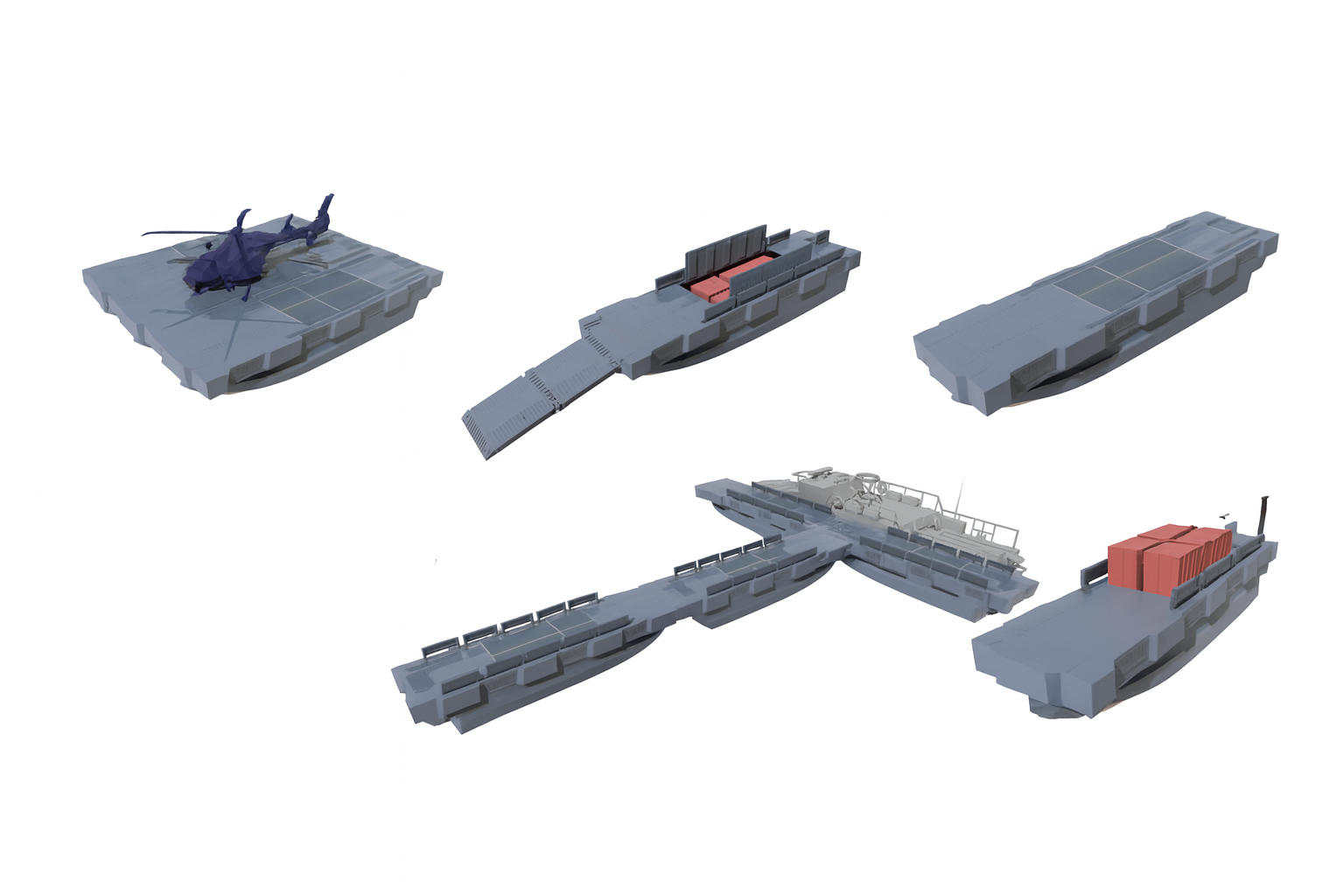

Autonomous Uncrewed Surface Vessel (USV) – Perception, Mapping, and Multi-modal Fusion

In this defence-oriented autonomous marine project, I was responsible for the perception and mapping stack of an uncrewed landing craft / autonomous boat platform. My work focused on building a robust multi-modal perception system integrating LiDAR, sonar, and cameras; developing 3D mapping and environment modelling for reliable situational awareness on water; and providing real-time scene understanding (obstacle detection, shoreline and infrastructure mapping, and environment reconstruction) to support downstream decision-making and planning. The perception–mapping pipeline was designed to operate under challenging marine conditions and formed the foundation for safe autonomous navigation.

Source: Navantia Australia

Bionic Visual–Spatial Augmented Glasses for People Who Are Blind or Vision-Impaired

This project developed a bionic visual–spatial augmented glasses system that helps blind and vision-impaired users understand the 3D environment around them. My work focused on building a real-time perception and representation pipeline that converts camera and IMU data into intuitive spatial cues. Using probabilistic distance-field modelling and scene understanding techniques, the system gives users spatially meaningful feedback (e.g., obstacle proximity and the geometry of indoor environments), enabling safer and more confident navigation.

Source: UTS Techlab

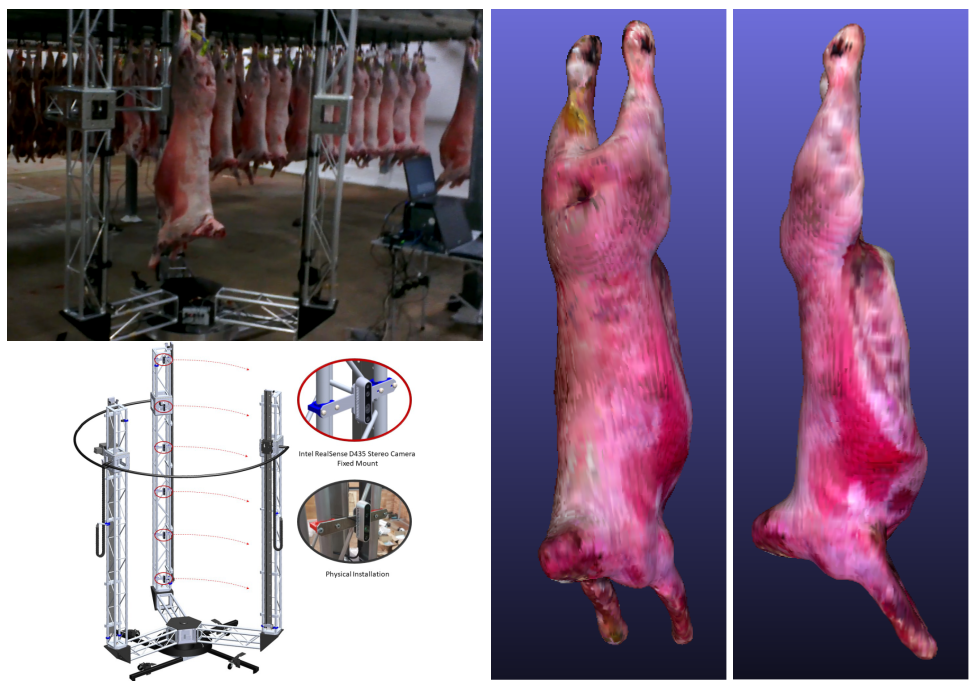

Surface Reconstruction for the Meat and Livestock Project

I applied my research outcomes to trait estimation in the meat and livestock project, funded by the Australian Government Department of Agriculture and Water Resources as part of its Rural R&D for Profit programme and by Meat and Livestock Australia under grant V.RDP.2005. Using probabilistic mapping approaches, my main objective was to convert noisy and incomplete point clouds and depth images of non-rigid live animals and rigid carcasses into 3D meshes for further processing.

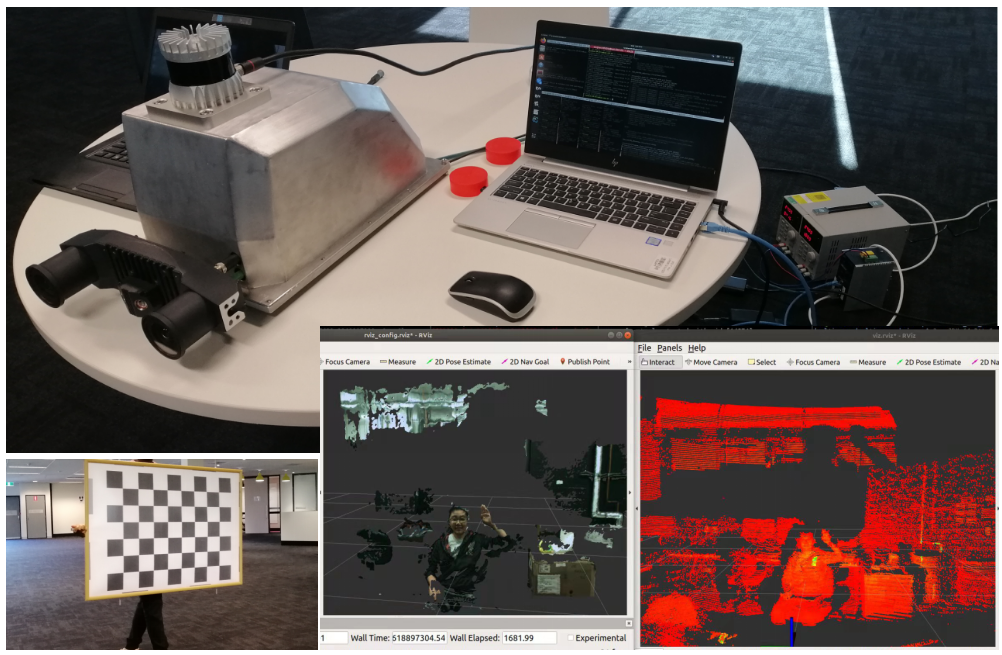

Multi-sensor Perception, Calibration and Synchronisation for a Fast Off-road Vehicle

FORV, a Fast Off-Road Vehicle for Defence Applications, is part of UTS’s next generation of fast wheeled robots. I was responsible for establishing its multi-sensor fusion platform for mapping and navigation. We calibrated and integrated several sensors, including a binocular camera, a 3D LiDAR and an inertial measurement unit (IMU), and built a data collection and synchronisation system based on NVIDIA Jetson and ROS.

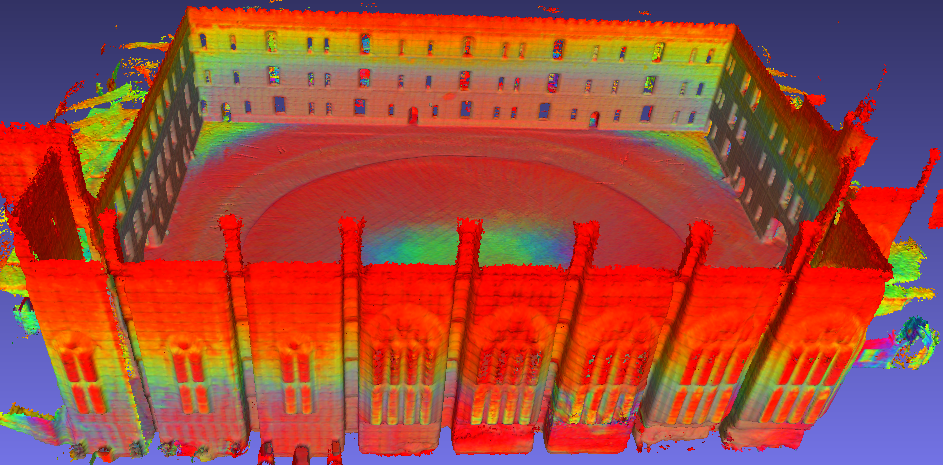

Simultaneous Localisation and Mapping for Locomotive No.1

I had the opportunity to work with my colleagues to build a digital representation of the historical train Locomotive No. 1 at the Powerhouse Museum. The datasets were collected with a LiDAR sensor and an RGB-D camera, along with an IMU. We moved the sensors around the train to obtain high-quality observations and then used various SLAM frameworks to reconstruct an accurate surface of the train. I also generated a coloured point cloud of the full train by assigning RGB information to the LiDAR measurements.

Previous Industry Experience

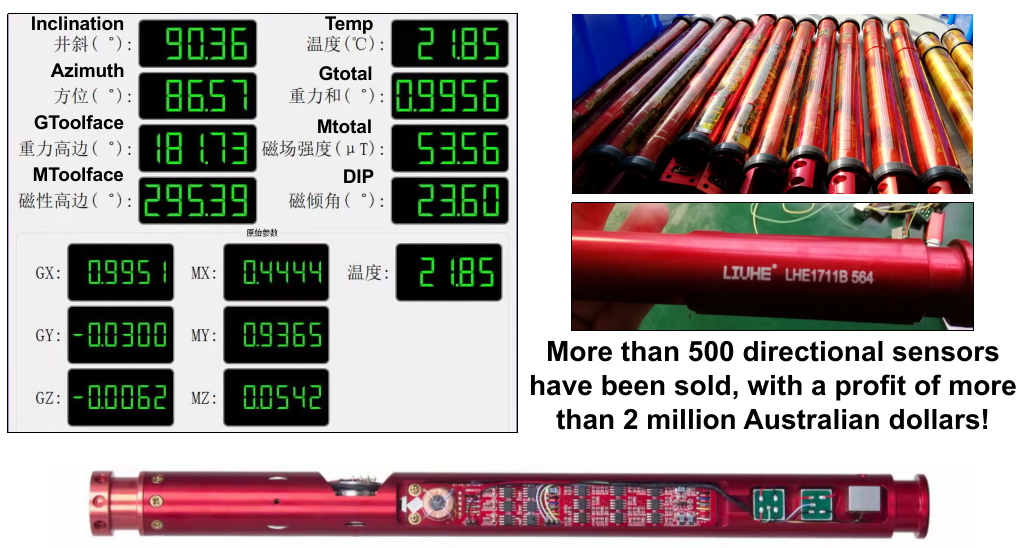

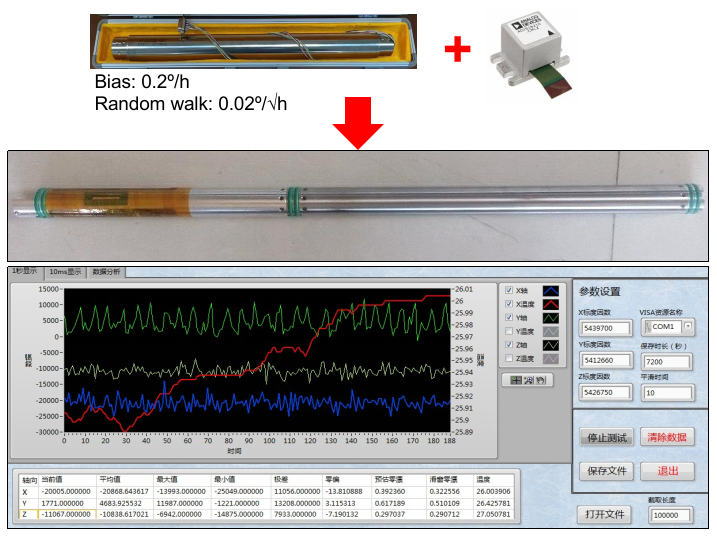

Between completing my bachelor's degree and beginning my PhD, I led an R&D team in the drilling technology industry, where I was responsible for both hardware and software design. During this period, I contributed to the development and commercialisation of multiple sensing products.

Directional Sensor Using Accelerometers and Magnetometers for Underground Measurement While Drilling (MWD)

North-Seeking Gyro Using Accelerometers and Gyroscopes for Underground MWD

Other Commercialised Sensors and Electronics

Contact

iriswu076@gmail.com

If you’d like to join my group as a PhD or Master by Research student, CSC scholar, or intern, feel free to email your CV :)